At least since the days of Isaac Asimov’s famous book I, Robot, and possibly long before, mankind has been obsessed with the idea of artificial intelligence (AI). In 1956, John McCarthy coined the term “artificial intelligence”, describing it plainly as “the science and engineering of making intelligent machines.” But frankly, that definition is a bit vague. What exactly is artificial intelligence?

Today, many common definitions of artificial intelligence center on mankind. They describe AI as the science of making computers and machines that mimic humans by displaying cognitive functions such as perception, reasoning, learning, and problem-solving. But this definition has its flaws.

The issue with centering humans when pursuing AI is the bias it introduces. Humans once believed that the Earth was the center of the universe, and we have repeatedly shown a tendency to believe that everything revolves around us (literally and figuratively). This habit leads us to make assumptions about the universe that aren’t necessarily true, and even to defend those assumptions in the face of new evidence (see Copernicus).

In the case of AI, centering the human as the pinnacle of intelligence not only caps AI’s potential at our own limitations, but also significantly influences how we approach developing these thinking machines.

It limits AI research by leading even some of the most senior researchers in the field to believe that artificial general intelligence (AGI), or a general problem solver, is not achievable. Some seem to believe that if we ‘superior-minded’ humans do not have this quality, then it must be impossible. Others follow the logic that something without general intelligence cannot create something with it, much as some scientists believe that intelligence and consciousness cannot simply emerge from matter.

A human-centric view also shapes how AI research is approached, as the various approaches to AI taken in the past illustrate. Some argue that a major problem in AI research has been gauging progress, because we don’t have a clear, agreed-upon definition of our objective.

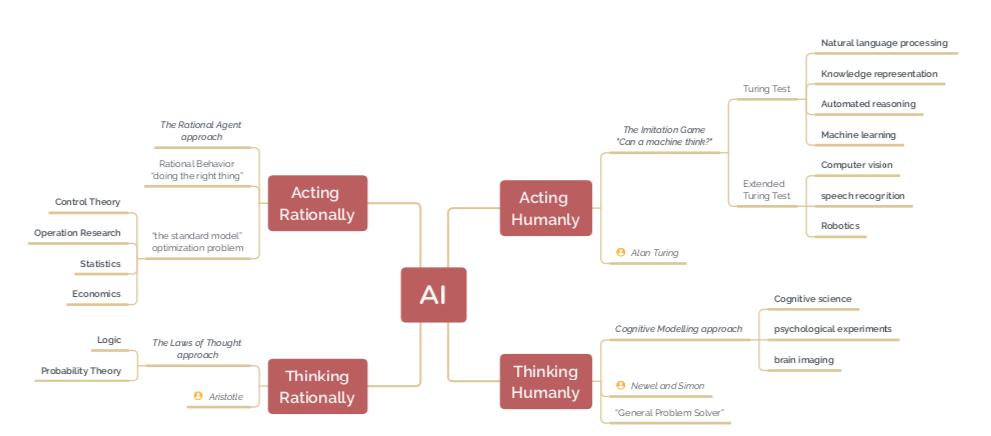

In 1995, Russell and Norvig proposed four viable approaches to AI, creating machines that:

- act humanly,

- think humanly,

- act rationally,

- or think rationally

As one might imagine, these approaches can be interpreted in a number of ways, and the way the problem is framed influences the solutions proposed.

Alan Turing, who is often called the father of theoretical computer science and AI, proposed a thought experiment when pondering intelligence in machines. This experiment, known as the Turing test, goes as follows: a blind interview takes place between you and a respondent. You ask a series of questions and receive responses from this anonymous participant. A computer passes the Turing test if you, the human interrogator, cannot tell whether the answers to your questions come from a person or a computer.

To pass the test, the computer is required to have a number of capabilities such as:

- Natural language processing – to communicate naturally and effectively with human beings;

- Knowledge representation – to store the information it receives;

- Automated reasoning – to use the stored information to answer questions and draw new conclusions;

- And machine learning – to adjust to new situations and recognize new patterns.

In Turing’s original thought experiment, physically simulating a human body is irrelevant to demonstrating intelligence. Other researchers, however, argue that embodiment is essential. They have proposed a total Turing test that involves interaction with real-world objects and people. To pass it, the machine would need additional capabilities, such as computer vision to see its environment and audio sensors and processing to hear it. It would also need robotics to move around and interact with the environment.

This illustrates how the words we use shape the way we see things. For instance, Turing believed that computer intelligence could, and likely would, construct itself differently from human intelligence, but that this did not make it any less valid. So in his thought experiment, he was less concerned with how the computer arrives at its apparent intelligence and more concerned with how it is perceived by the other intelligent agents it encounters.

But even his experiment has a little ego. The Turing test makes human judgment the litmus test for intelligence, implying that a machine cannot be intelligent unless we believe that it is. We see the same self-importance in how we humans view other forms of intelligence already observable on the planet.

Our big-headedness may not even be intentional, though; our bias is built into our words. According to the Oxford dictionary, the word artificial comes from the Latin artificialis, from artificium, meaning ‘handicraft’ or ‘workmanship’. It is an adjective that means:

- made or produced by human beings rather than occurring naturally, especially as a copy of something natural.

- (of a person or their behavior) insincere or affected.

Some may argue that this is not the intended sense of the word in context. But it is through the second definition that arrogance seeps into our language. Whether we realize it or not, the connotations of the word mean that by calling this new form of intelligence artificial, we are essentially calling it fake. Look at the synonyms for the word (faux, feigned, phony) and you begin to recognize that we subconsciously associate artificial things with cheap, inherently inferior imitations. Once again, we cap AI’s potential and put ourselves on a pedestal.

By using the word artificial, we also center the role of human contributions in the story of AI, casting ourselves as inventors rather than explorers or discoverers. Some theorists have argued that AI is truly a greater intelligence that precedes us, and that we are only now developing the technology to perceive and interact with it. Much as the technology of the past allowed us to explore the oceans and outer space, today’s computer technology is allowing us to explore previously uncharted intelligence.

The second word in question, intelligence, is a noun that seems to cause a bit of controversy. It is defined as:

- the ability to acquire and apply knowledge and skills.

Which is fair enough. But when we dig deeper and try to define knowledge, we find that we again put ourselves in the middle, describing it as:

- facts, information, and skills acquired by a person through experience or education

Does a dog not know its owner, even if only as the person who feeds it? Are instincts not just knowledge passed down through time by evolution and biology? What does it mean to actually know something? This has been a big question in the field of AI for quite some time (see the Chinese Room thought experiment).

The word ‘intelligent’ dates to the early 16th century. It comes from the Latin intelligent-, meaning ‘understanding’, from the verb intelligere, which combines the root words inter, meaning ‘between’, and legere, meaning ‘choose’. This etymology points to the essence of intelligence: the ability to use information to choose between potential paths, to discern between objects and alternatives, and to play an active role in engaging with one’s environment.

With this definition in mind, we can more clearly see the intelligence of the beaver that knows to build a dam for shelter and protection. Or fish that know to swim in schools in order to avoid predators. Or even the flower that knows to rotate and face the sun, for a little more food throughout the day.

There is a quote from Terence McKenna: ‘Animals are something plants invented to move seeds around.’ I love this quote because it follows the same counterintuitive logic as the claim that cats and dogs figured out a way to get us to feed, shelter, and tend to them, raising the question ‘who domesticated whom?’ Statements like these shift our perspective and challenge the assumptions we often make without even realizing it.

My personal opinion is that we could use a shift in perspective regarding AI; we need to humble ourselves and remove ourselves from the center of this narrative. A dear loved one, and a very wise person, proposed that we move from the term AI to ATOI, A Type Of Intelligence. This adjustment acknowledges the intelligence we are dealing with as authentic and frames it as something we are coming into contact with rather than inventing. I believe this humility will allow us to learn as children do, as explorers and adventurers, discovering more about ourselves and our environment on this journey toward intelligent machines.

References

Toosi, A. et al. (2022) A brief history of AI: How to prevent another winter (A critical review). Available at: https://arxiv.org/pdf/2109.01517.pdf (Accessed: February 11, 2023).

I recently completed my master’s program in AI and Robotics, so you can imagine how I responded to finding out that the credibility of my degree was in question. I was blindsided by the weight of the little ‘c’ in ‘MSc’ (which honestly always kind of bugged me). To me, that one little letter suggested a distinction between a master’s in science and a master’s in computer science. But just how different are the two fields? Can I call myself a real scientist?

Computer science is often defined as the study of computers and computational systems, but that doesn’t tell us much. In his 2005 paper ‘Is Computer Science Science?’ (which sparked this spiral of doubt), Peter Denning defines it as the science of information processes and their interactions with the world. His definition defends the field against critics who claim that true science is the systematic study of the structure and behavior of the natural world through observation and experimentation, and that the study of man-made computers therefore does not qualify. Others contend that the principles of computer science truly belong to other fields, such as physics, electrical engineering, and mathematics.

Denning and others (like myself) believe that the field of computer science encompasses a wide range of topics and can be divided into several sub-disciplines, including but not limited to:

- Algorithm design and analysis

- Theory of computation

- Programming languages and software engineering

- Computer systems and architecture

- Databases and information systems

- Artificial intelligence and machine learning

- Human-computer interaction and user interface design

- Graphics and visualization

- Computer networks and distributed systems

Computer scientists use the principles and techniques of computer science to design, implement, and evaluate computational systems and applications.

Today there is a great deal of excitement around AI research, especially in areas like natural language processing and computer vision. But much as when Denning wrote his paper, computer science still has a credibility problem due to constant overhype and missed projections. For instance, since the 1960s people have been claiming that they would soon build artificially intelligent systems that rival and replace human experts.

There is still a tendency to let excitement around new discoveries build into unwarranted hype and overly optimistic projections for computing milestones. Nonetheless, computer science has a significant impact on a wide range of industries, such as healthcare, finance, transportation, and manufacturing. And to be fair, given enough time, the field seems to make good on even its most ambitious claims and pursuits. It can be argued that today’s large language models pass the Turing test, achieving human-level natural language text generation. Computing has also contributed to remarkable breakthroughs in science and medicine, from genome editing to protein folding.

Some may argue that the media and marketers have infiltrated the research space of computer science. Still, given the volume of research being done in computing today, it is hard to claim that it is not a science.

Perhaps the most impressive thing about computer science is that it is constantly forming relationships with other fields, overlapping with the physical, social, and life sciences and opening new research fields. Examples of these overlaps include bioinformatics, biometrics, neural computing, and quantum computing.

For this reason, regardless of how one feels about its status as a true science, computer science is considered one of the most versatile and in-demand fields of study today.

References

Denning, P. (2005) ‘Is computer science science?’, Communications of the ACM, 48(4), pp. 27–31. Available at: http://www.ifba.edu.br/professores/antoniocarlos/cacmApr05.pdf (Accessed: January 30, 2023).